Jeffrey Yepez

Quantum computing labs

Overview of QC labs in experimental physics

This page describes three labs that I founded for in-house experimental quantum computing studies. Since the early 1990s, my QC Group has pioneered the development of quantum computers for computational physics applications. My research has focused on a broad range of computational physics applications, with emphasis on designing suitable quantum algorithms (a theoretical physics effort), implementing and testing those algorithms via quantum simulation (quantum information processing and high performance computing efforts), and pushing the limits of quantum information processing technologies (experimental physics efforts).

From 1992 to 2006, my quantum computing experiments conducted at the Air Force Research Laboratory at Hanscom AFB in Bedford, Massachusetts, were supported by the Air Force Office of Scientific Research (AFOSR). My first research initiative in quantum computing was called the Quantum Computation for Physical Modeling (QCPM) initiative. Published proceedings of the QCPM workshops are listed here:

After transferring to the Air Force Research Laboratory in Kihei, Hawaii, in 2011, my experimental work in quantum computing was supported by the DoD High Performance Computing Modernization Program (HPCMP) Office, and I continued quantum computing research supported by AFOSR.

Analog and digital quantum computers

For quantum simulation purposes, two different approaches to quantum computation are typically considered. A quantum computer that maps the continuous quantum mechanical evolution of one microscopic system with an engineered system Hamiltonian to emulate the behavior of another quantum system may be termed an analog quantum computer or quantum emulator. A quantum computer that maps a discrete qubit array (a quantum circuit network) governed by an engineered unitary evolution to efficiently simulate the behavior of another quantum system may be termed a digital quantum computer or a Feynman quantum computer. At the inception of my experimental quantum computing work, I was fascinated by both approaches, and remain so to this day.
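The distinction can be made concrete with a single qubit. The sketch below is illustrative only and is not taken from the lab work described on this page. It compares the continuous evolution exp(-iHt) under an assumed Hamiltonian H = aX + bZ (the analog picture) with a first-order Trotter product of discrete X and Z rotation gates (the digital picture). The coefficients `a` and `b`, the function names, and the choice of a first-order product formula are all assumptions made for the example; `a` and `b` must not both be zero.

```python
import math

# 2x2 matrices as nested tuples. All names here are illustrative.
I2 = ((1, 0), (0, 1))
X = ((0, 1), (1, 0))
Z = ((1, 0), (0, -1))

def matmul(a, b):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def rotation(ax, az, theta):
    """exp(-i * theta * (ax*X + az*Z)) for a unit vector (ax, az).

    Uses the identity exp(-i*theta*n.sigma) = cos(theta)*I - i*sin(theta)*n.sigma.
    """
    c, s = math.cos(theta), math.sin(theta)
    return tuple(
        tuple(c * I2[i][j] - 1j * s * (ax * X[i][j] + az * Z[i][j])
              for j in range(2))
        for i in range(2)
    )

def analog_evolution(a, b, t):
    """Continuous evolution exp(-i*H*t) with H = a*X + b*Z (assumed nonzero)."""
    norm = math.hypot(a, b)
    return rotation(a / norm, b / norm, norm * t)

def digital_evolution(a, b, t, steps):
    """First-order Trotter product: `steps` repetitions of an X gate and a Z gate."""
    dt = t / steps
    gate = matmul(rotation(1.0, 0.0, a * dt), rotation(0.0, 1.0, b * dt))
    u = I2
    for _ in range(steps):
        u = matmul(u, gate)
    return u

def max_diff(u, v):
    """Largest entry-wise difference between two 2x2 matrices."""
    return max(abs(u[i][j] - v[i][j]) for i in range(2) for j in range(2))
```

Calling `max_diff(analog_evolution(1.0, 0.5, 1.0), digital_evolution(1.0, 0.5, 1.0, steps))` for increasing `steps` shows the gate sequence converging to the continuous evolution, which is the sense in which a digital machine simulates what an analog machine emulates directly.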

The quantum engineering technology explored in my BEC Lab was an example of using atomic and quantum optical methods to form and control a spinor Bose-Einstein condensate (BEC) of an ultracold quantum gas composed of alkali bosonic atoms. This work was conducted for the purpose of prototyping an analog quantum computer.

NMR quantum information processing was originally used to obtain the first experimental proof-of-concept of quantum information processing needed for the development of a Feynman quantum computer. The quantum engineering technology explored in my NMR Lab was an example of using spatial NMR spectroscopy to form and control a quantum pseudo-pure state of spin-1/2 atomic nuclei. This work explored the frontiers of measurement-based quantum information processing technology and was conducted for the purpose of prototyping a Feynman quantum computer.

Fine-grained parallel computing explored in my Dynamics Lab was an example of using reversible information processing methods to form and control an emergent Navier-Stokes fluid of a classical lattice gas comprised of fermionic bits. This work was conducted for the purpose of testing a Feynman quantum computer in its simplest application of simulating the behavior of a classical many-body system confined to a spacetime lattice. A Feynman quantum computer can efficiently simulate the behavior of a finite quantum many-body system, but it can also simulate the behavior of a finite classical many-body system. Lattice-gas information processing is time-reversible, a salient characteristic it shares with unitary quantum information processing. So a classical lattice gas is an archetype of a quantum lattice gas.
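Time reversibility of a lattice gas can be checked directly in a few lines. The sketch below uses the HPP rule, a standard textbook lattice gas on a square grid with four velocity bits per site, chosen here only for brevity. It is not the model that was run in the Dynamics Lab, and HPP by itself does not reproduce isotropic Navier-Stokes behavior. The bit labels, function names, and periodic boundaries are assumptions of the example.

```python
import random

# One bit per velocity direction at each site (labels are illustrative).
E, N, W, S = 1, 2, 4, 8
SHIFT = {E: (1, 0), N: (0, 1), W: (-1, 0), S: (0, -1)}

def collide(grid):
    """Head-on pairs rotate by 90 degrees; every other state is unchanged."""
    swap = {E | W: N | S, N | S: E | W}
    return [[swap.get(cell, cell) for cell in row] for row in grid]

def stream(grid, sign=1):
    """Move each bit one site along its velocity (sign=-1 moves it back)."""
    h, w = len(grid), len(grid[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for bit, (dx, dy) in SHIFT.items():
                if grid[y][x] & bit:
                    out[(y + sign * dy) % h][(x + sign * dx) % w] |= bit
    return out

def step(grid):
    """One forward update: collide, then stream."""
    return stream(collide(grid))

def step_back(grid):
    """Exact inverse of step: undo streaming, then collide.

    The collision rule is its own inverse, so no extra table is needed.
    """
    return collide(stream(grid, sign=-1))

def mass(grid):
    """Total number of occupied bits (particles) on the lattice."""
    return sum(bin(cell).count("1") for row in grid for cell in row)
```

Starting from any grid of 4-bit integers, applying `step` some number of times and then `step_back` the same number of times returns the initial configuration bit for bit, and `mass` is unchanged throughout. Each bit holds at most one particle, which is the sense in which the bits are fermionic.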

Type-I and type-II quantum computers

For quantum simulation and classical simulation purposes, I considered two different types of quantum computers. Let me define the second type of quantum computer first. A quantum computer that maps the continuous quantum mechanical evolution of one microscopic system with an engineered system Hamiltonian and with a restriction of its quantum entanglement being strictly local in space is termed a type-II quantum computer. In contradistinction, a quantum computer with an engineered system Hamiltonian, but without any restriction on the spatial extent of its quantum entanglement, is a type-I quantum computer.

If quantum entanglement localization in a type-II quantum computer is induced by continual state measurement, then the type-II quantum computer is a measurement-based quantum computer. Historically, type-II quantum algorithms were the first example of a measurement-based quantum computing algorithm that employs entangled cluster states. Quantum entanglement localization can occur naturally in a many-body quantum system, and this is a remarkable way to realize a type-II quantum computer.

A Feynman quantum computer is a prototypical type-I quantum computer, while an analog quantum computer based on a zero-temperature spinor Bose-Einstein condensate (BEC) is a type-II quantum computer -- and this occurs without any externally applied state measurement. So one reason I explored type-II quantum computers was the connection to BECs -- a form of quantum matter that occurs naturally (for example in the compressed matter in white dwarfs and neutron stars) and that can be produced in a table-top setup in a lab at a repetition rate of nearly one per second. Another reason for studying type-II quantum computers is that they are easier to analyze theoretically. It is for these reasons that (when I was a lead Air Force Quantum Computing Program Manager) I helped support the development of table-top BEC-QC prototypes. The description below of the BEC Lab is an example of developing ultracold quantum gas technology toward prototyping a type-II quantum computer. The description below of the NMR Lab is another example of developing quantum information processing technology for prototyping a type-II quantum computer.

A type-I quantum computer can be used to model strongly-correlated quantum mechanical systems, while a type-II quantum computer can be readily used to model nonlinear classical systems (such as Navier-Stokes fluids) and quantum systems (such as zero-temperature BEC superfluids). A spinor BEC superfluid is interesting from an applied mathematics viewpoint because it can behave as a single macroscopically coherent quantum particle whose equation of motion admits soliton solutions such as Skyrmions.

In summary, as a practical engineering design advantage, an analog quantum system can behave as a type-II quantum computer with only local quantum entanglement. Such a quantum system is isomorphic to a large array of localized quantum processors interconnected by communication channels whose data transfers are represented by permutation-based interchange operators (orthogonal operators).

Quantum computing lab facility descriptions

BEC Lab

The first cold atomic vapor BECs were realized by Cornell and Wieman at NIST and Ketterle at MIT in 1995. BECs are a great help in getting to the bottom of how quantum vortex dynamics leads to quantum turbulence. Conversely, observing vortices in a condensate helps the experimentalist establish that the BEC state was indeed created in the experiment. The first cold atomic vapor BEC was a rubidium-87 gas cooled to around 170 nK, and it had essentially no normal fluid component at all. It comprised a macroscopic number of integer-spin particles in what is effectively a zero-temperature ground state.

Rarefied atom vapors (dilute alkali gases of typically around 10^6 to 10^7 Rb-87, Li-7, or Na-23 atoms) are laser-cooled in magneto-optical traps and trapped in optical lattices. BECs are a pristine realization of an inviscid superfluid. When the cold atoms are confined to spatial nodes of an optical three-dimensional lattice, these atomic quantum gases become experimental realizations of quantum lattice gases. As a further conceptual simplification, we may consider a quantum lattice gas to be a collection of qubits confined to a cubical grid where the qubits hop between grid points and exist in quantum mechanical superposition states spanning the whole grid. Alkali atoms placed in an optical lattice (the experimental equivalent of placing bits in a qubit array) may be used for quantum simulation of strongly correlated condensed matter systems. This scheme constitutes a practical program of analog quantum computation for physical modeling.

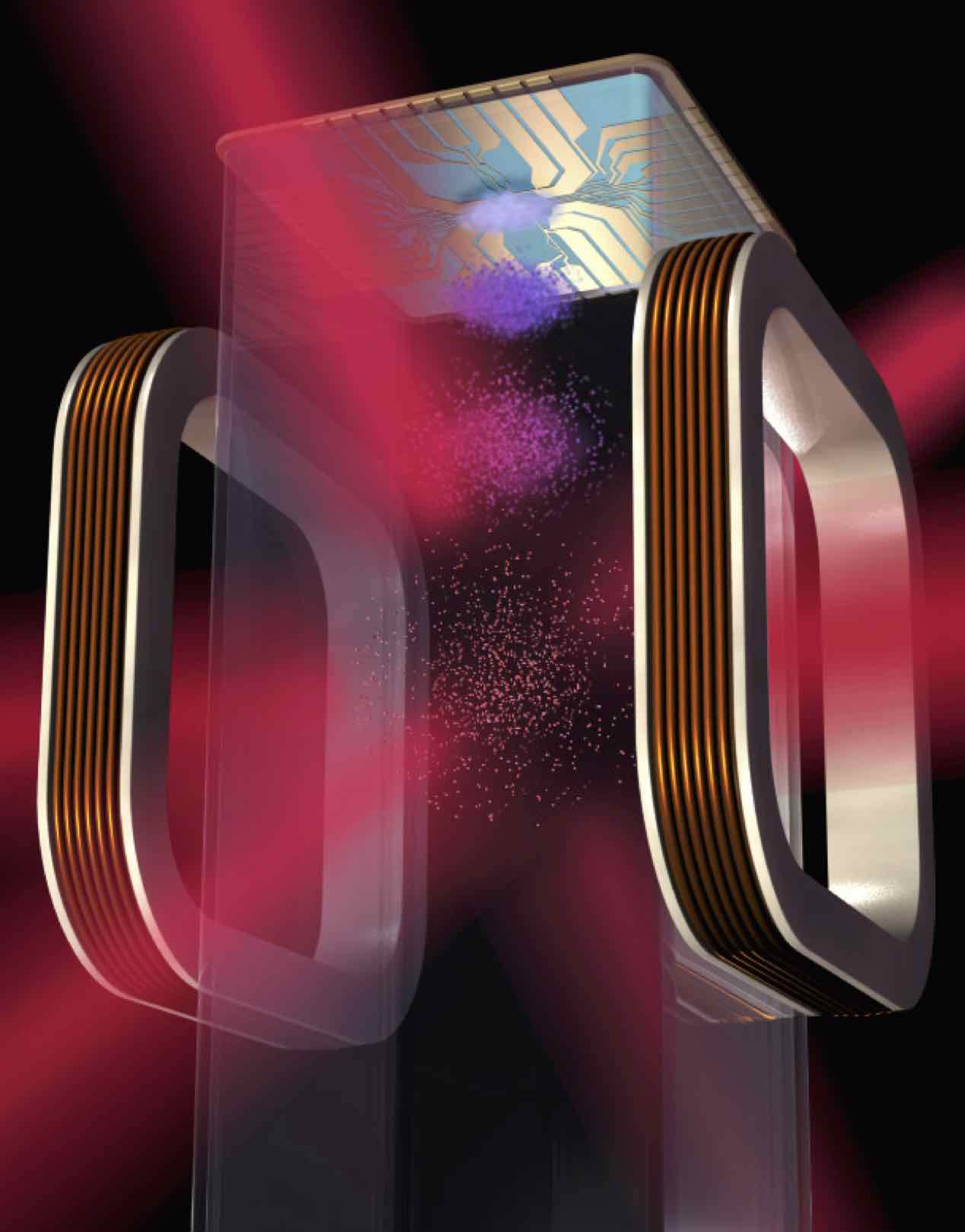

Two prototypes from ColdQuanta were acquired and installed in my BEC Lab by the Air Force Research Laboratory (AFRL) through the DOD HPCMP Quantum Computing Program. The first of the two prototypes, a commercial integrated analog quantum simulator, was delivered to UH Manoa on 21 April 2014, and just a few days later, on 24 April 2014, a BEC was created in Hawaii. The second prototype was delivered by the end of fiscal year 2014. These two quantum devices use an ultracold Bose-Einstein condensate (BEC) quantum gas (rubidium-87 atoms as qubits) to serve as the computational medium. Such BEC systems are used as quantum emulators when the BEC superfluid is trapped in an optical lattice system, a capability available within the ColdQuanta atom-chip vacuum cell assembly.

The deployment of this particular test equipment represented a milestone technology transition for AFRL/AFOSR. This technology constitutes a game-changing commercial-grade analog quantum simulator:

- These systems used analog quantum computing technology where qubits are atoms. The ultracold coherent quantum system behaves as a spinor BEC superfluid. Quantum control of the spinor BEC is produced by lasers and atom chip traps.

- The scientific objective was to develop BEC superfluid technology useful for the quantum lattice gas model of quantum computation. The objective was to study ultracold quantum gas spinor BECs confined in optical lattices. These test systems allowed me to evaluate state-of-the-art experimental quantum "hardware" technologies for future high-performance computing applications in computational physics. Spinor BEC superfluids confined to optical lattices represent a leading quantum technology for scalable analog quantum computing.

- Critical components of the ColdQuanta BEC system (e.g. Physics Station & Ultracold Matter Cell) were selected by NASA/Jet Propulsion Laboratory for a scientific mission on the International Space Station, BEC Lab (ultracold quantum gas zero-g 3D optical lattice).

- The BEC QC prototypes were transferred to the Space Vehicles Directorate of AFRL at the end of the summer of 2016.

NMR Lab

One early quantum technology for quantum information processing I explored was spatial nuclear magnetic resonance (NMR) spectroscopy. My NMR Lab at the Air Force Research Laboratory had a Bruker 500 UltraShield NMR spectrometer to prototype quantum information processing strategies toward realizing a Feynman quantum computer. An NMR spectrometer using spatial tomography can be used to test measurement-based quantum algorithms whose macroscopic scale behavior is classical yet exhibits highly nonlinear dynamics. The simplest example of nonlinear dynamics is described by the Burgers equation. The measurement-based quantum computing work was carried out in collaboration with Professor David Cory and his postdocs and graduate students at the MIT Nuclear Engineering Department. By the early 2000s, we had carried out simulations of quantum models in 1+1 dimensions and achieved the first NMR-based numerical solutions of the diffusion equation and of the Burgers equation, a milestone in spatial NMR quantum information processing.
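For reference, the two model equations named above are written here in their standard textbook 1+1 dimensional forms. The symbols are conventional notation and are not taken from the group papers: ρ is a density field, D a diffusion coefficient, u a velocity field, and ν a viscosity.

$$
\frac{\partial \rho}{\partial t} = D\,\frac{\partial^2 \rho}{\partial x^2},
\qquad
\frac{\partial u}{\partial t} + u\,\frac{\partial u}{\partial x} = \nu\,\frac{\partial^2 u}{\partial x^2}.
$$

The Burgers equation differs from the diffusion equation only by the advective term u ∂u/∂x, which is the source of its nonlinearity.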

The first NMR quantum information processing experiment used a one-dimensional array of qubits grouped in pairs (2 qubits per site). We used slice selection to partition a liquid sample of chloroform into distinguishable regions occupying about one picoliter of liquid each. The liquid-state NMR technique allowed us to realize a one-dimensional array of quantum processing nodes. This technique can be extended to handle two- and three-dimensional quantum networks.

Here are some group papers on spatial NMR quantum information processing:

- Towards a NMR implementation of a quantum lattice gas algorithm

- Experimental Demonstration of Quantum Lattice Gas Computation

- Multiple RF Coil Nuclear Magnetic Resonance Quantum Computing

- Simulation of the Burgers equation by NMR quantum-information processing

Dynamics Lab

In my Dynamics Lab, I explored particle-based methods for hydrodynamic fluid simulation and studied a variety of discrete many-body systems to model the macroscopic scale behavior of a many-body system of particles. A main goal of my computational physics research has been to develop a simulation methodology that can achieve much greater spacetime scales than the molecular dynamics simulation method for complex fluid systems.

I began this work by focusing on a method for modeling many-particle systems called the classical lattice gas method, which can be formulated at either a microscopic or mesoscopic spacetime scale. At the microscopic scale, the discrete particle dynamics can be either classical or quantum mechanical in nature. In classical lattice gases, each particle's occupancy of a local state is encoded by the Boolean value of a single digital bit.

Here are some of my papers on the classical lattice gas topic:

- Lattice-Gas Automata Fluids on Parallel Supercomputers

- Lattice Gas Dynamics, Volume I Viscous Fluids

- A Lattice-Gas with Long-Range Interactions Coupled to a Heat Bath

- Lattice-Gas Crystallization

- Integer lattice gases

Classical lattice gas simulations are suited to fine-grained massively parallel computers. Yet in the early 1990s there were no commercial-grade fine-grained parallel computers. So I joined the Information Mechanics Group at the MIT Laboratory for Computer Science and collaborated with Dr. Norman Margolus and Prof. Tommaso Toffoli, who were building a remarkable fine-grained parallel computer: a lattice gas machine called the CAM-8. Microscopic classical simulations run exceedingly well on a special-purpose single-instruction-multiple-data machine, such as the CAM-8 machine. I acquired two CAM-8 machines, including a 128 million site CAM-8 prototype (the largest one ever built), to serve as experimental computing hardware for the Dynamics Lab. To this day I remain interested in microscopic implementations that are time reversible. Reversible classical algorithms that use reversible classical logic gates serve as a stepping stone to unitary quantum algorithms that use unitary quantum gates.
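The link between reversible logic and unitary gates can be seen in a small example. The sketch below is an illustration, not code from the CAM-8 work. It takes the Toffoli (controlled-controlled-NOT) gate, checks that it is its own inverse on classical bits, and builds the permutation matrix that the same gate induces on the eight basis states. A real permutation matrix satisfies MᵀM = I, so it is orthogonal and therefore unitary. The helper names are assumptions of the example.

```python
from itertools import product

def toffoli(a, b, c):
    """Flip the target bit c only when both control bits a and b are 1."""
    return a, b, c ^ (a & b)

def as_permutation_matrix(gate, n_bits):
    """Matrix whose column for each input state has a single 1 at the gate's output."""
    states = list(product((0, 1), repeat=n_bits))
    index = {s: i for i, s in enumerate(states)}
    dim = len(states)
    m = [[0] * dim for _ in range(dim)]
    for s in states:
        m[index[gate(*s)]][index[s]] = 1
    return m

def is_orthogonal(m):
    """True when transpose(m) times m equals the identity matrix."""
    dim = len(m)
    return all(
        sum(m[k][i] * m[k][j] for k in range(dim)) == (1 if i == j else 0)
        for i in range(dim)
        for j in range(dim)
    )
```

`is_orthogonal(as_permutation_matrix(toffoli, 3))` holds because the gate is a bijection on bit strings. An irreversible map such as `lambda a, b: (a & b, b)` sends two inputs to the same output, so its matrix fails the same check and has no counterpart as a quantum gate.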